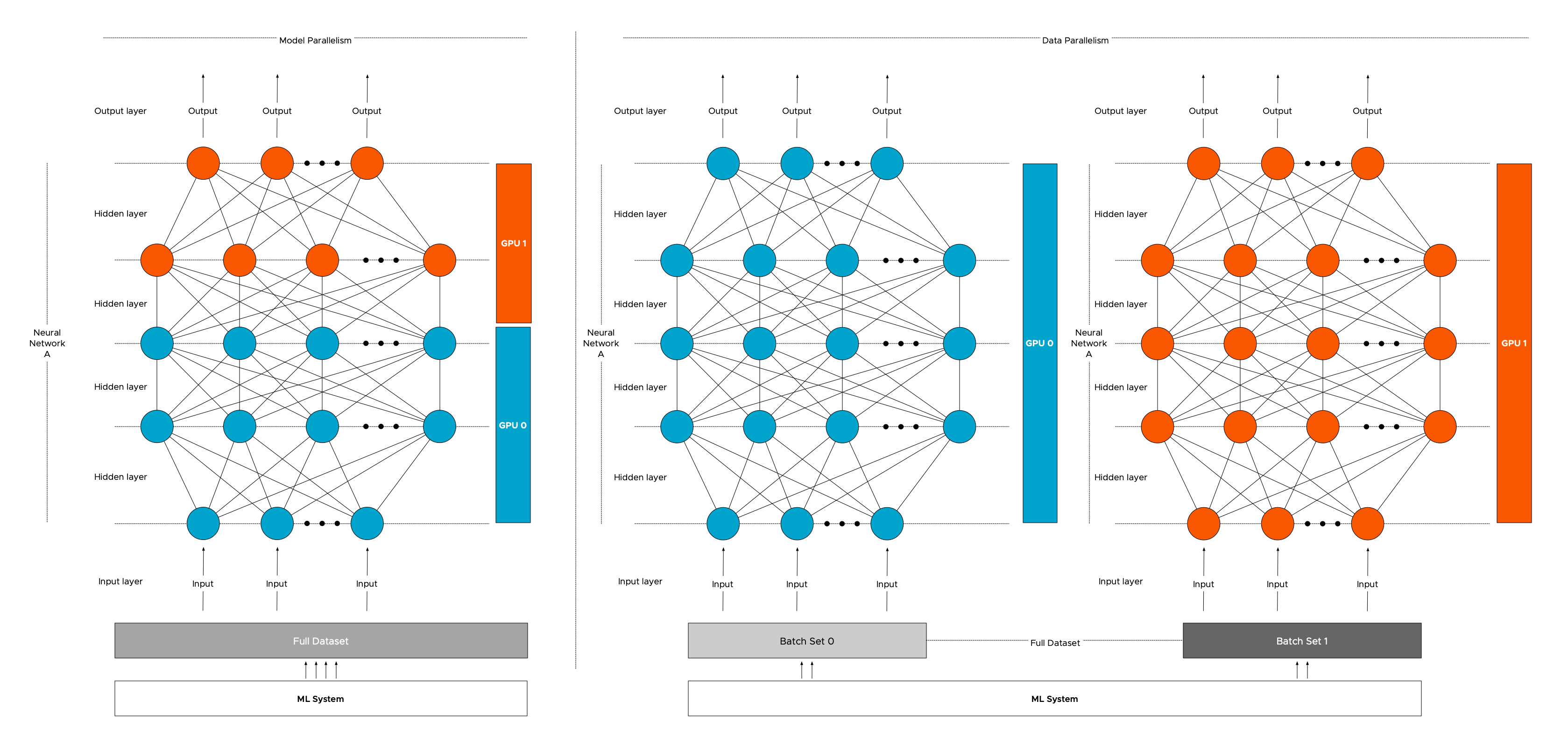

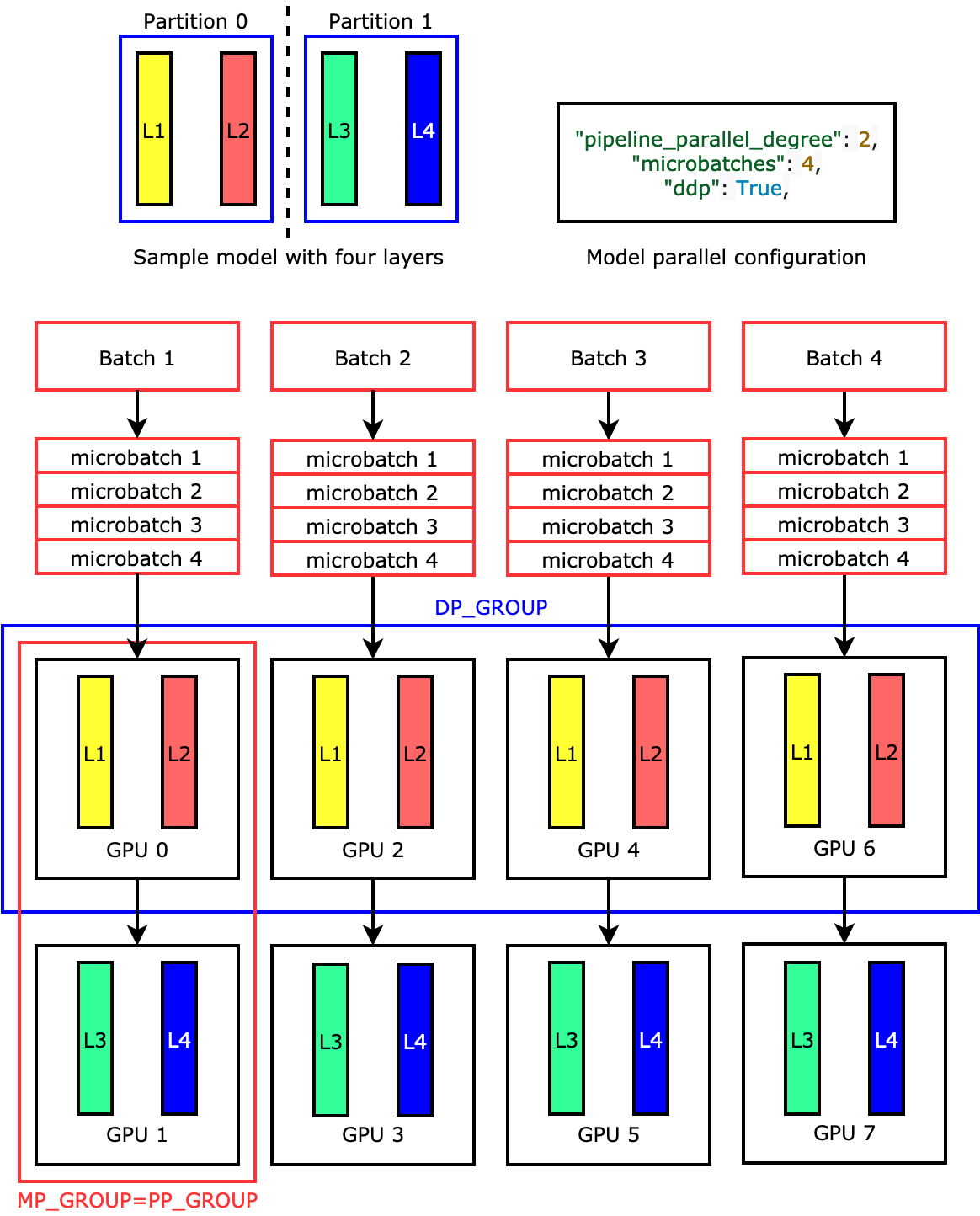

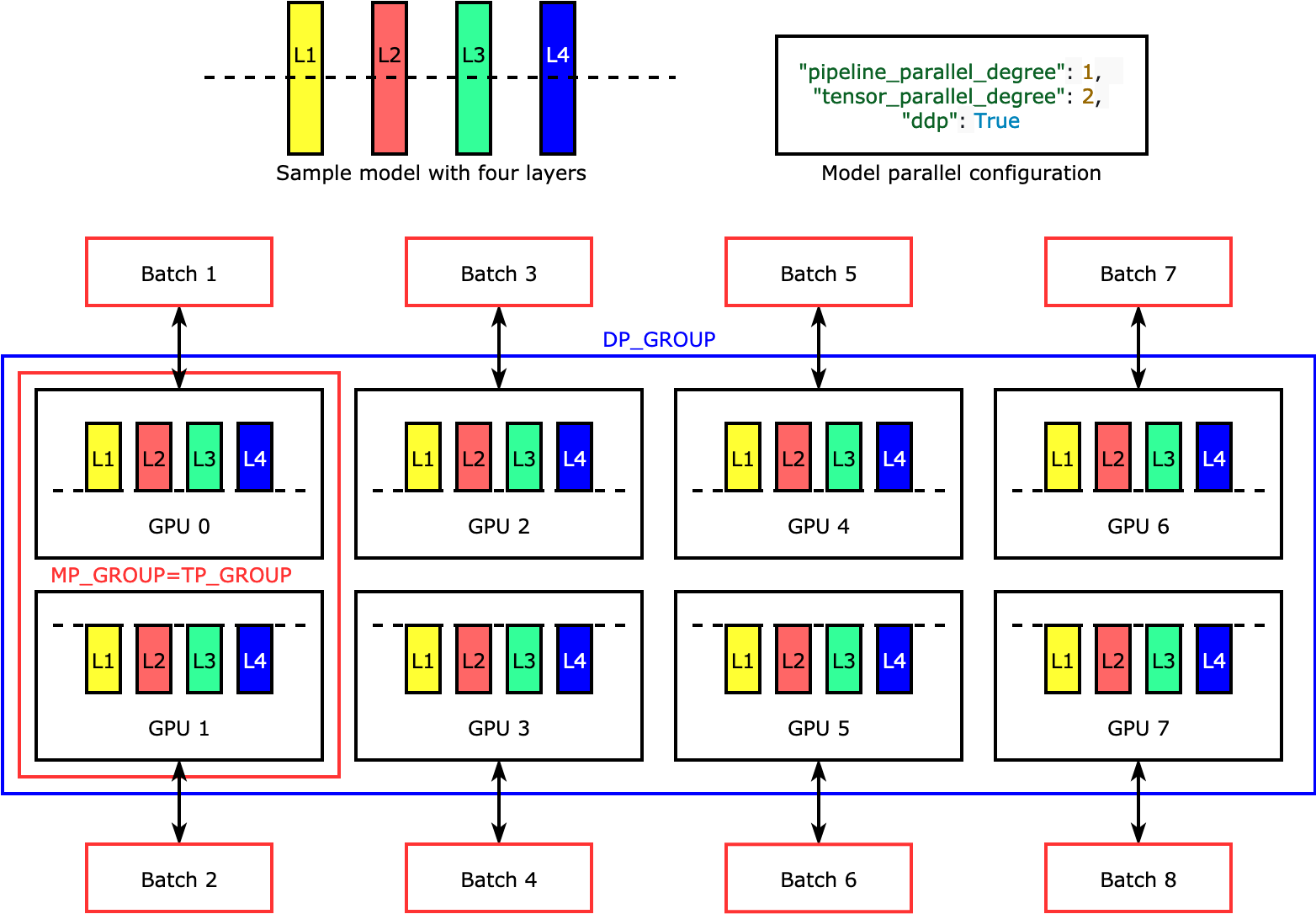

Figure 1 from "Efficient and Robust Parallel DNN Training through Model Parallelism on Multi-GPU Platform" (Semantic Scholar)

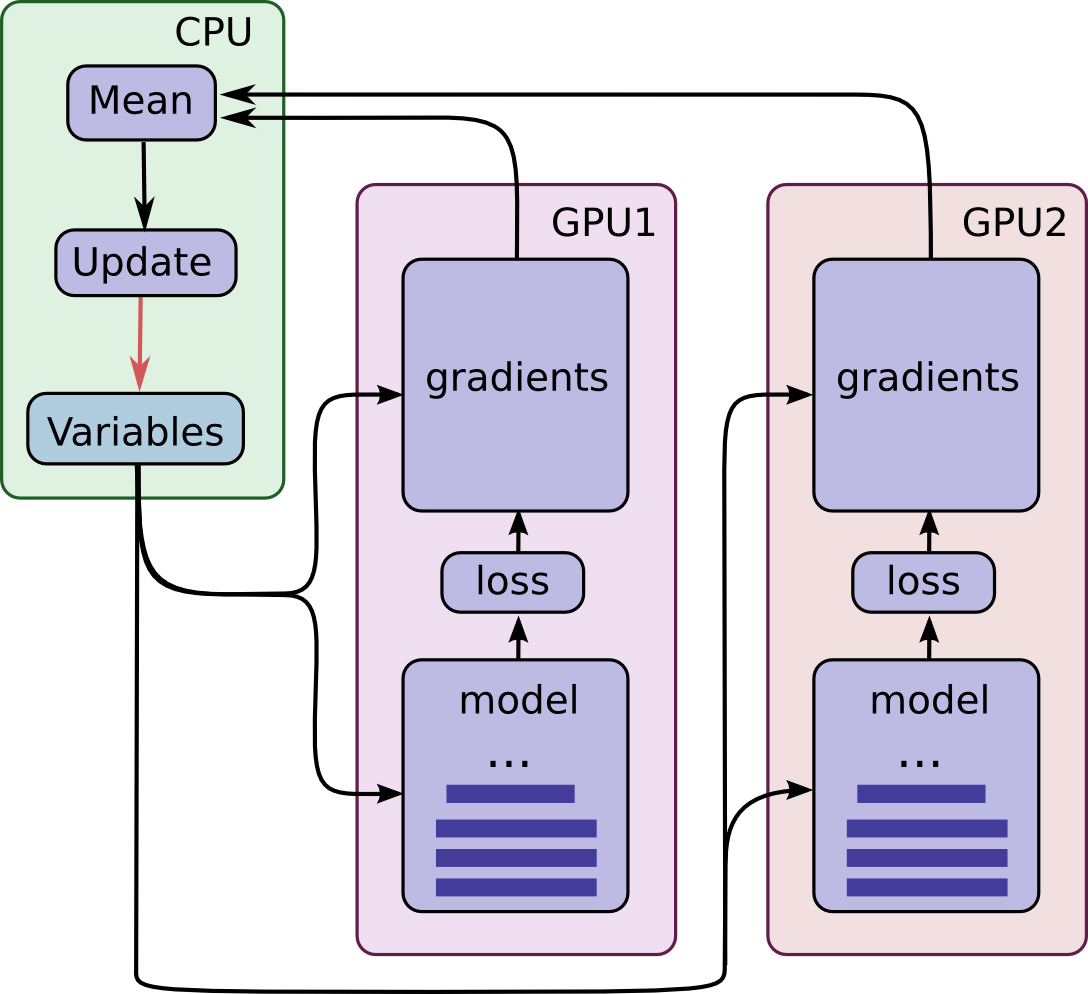

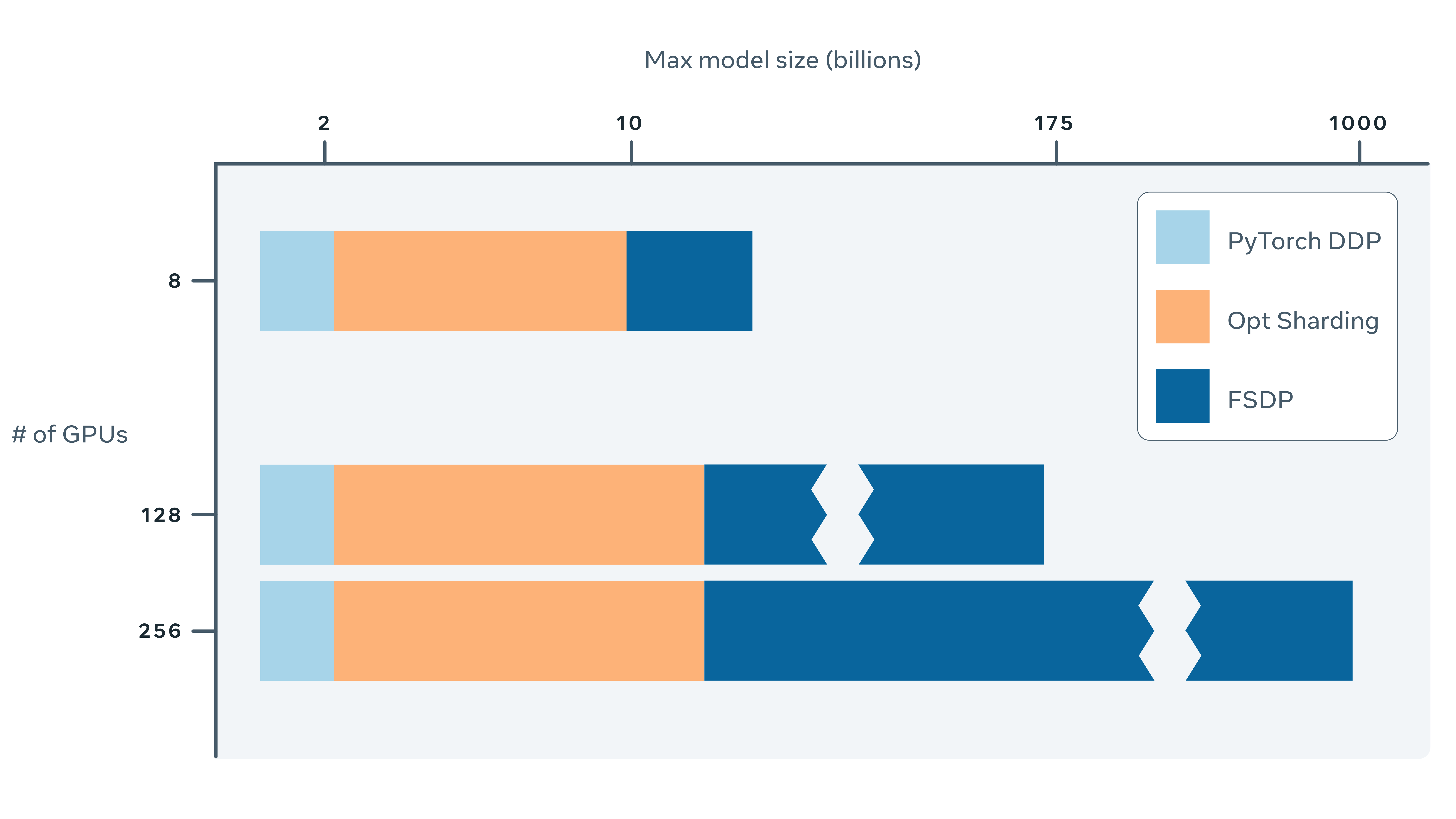

"Fast, Terabyte-Scale Recommender Training Made Easy with NVIDIA Merlin Distributed-Embeddings" (NVIDIA Technical Blog)